Will you approve this PR?

And other relevant questions for better technical screens

First off, Happy New Year and all that.

“Can you write me the pseudocode for a depth-first search?”

This was a question posed to me in February 2003 by an insolent twerp of a coder at a now-defunct outfit called Random Walk Computing back in San Francisco. I’d been laid off twice in a span of 4 months. This was smack dab in the ember-shower of the dotcom inferno that had rendered the city, especially its SoMa neighborhood, a glass and concrete wasteland rather resembling a corporate version of post-Aegon Harrenhal. RWC was one of those “what have I got to lose” interview calls I took, preparing as I was to leave the city for good. (I didn’t for another 3 years, but that’s a different story.)

Anyway, straightforward as this piece of code is, I froze for a bit, trying to rehydrate dim CS 410 memories from almost a decade earlier (the last time I had to code up a fundamental algorithm). All the while, Insolent Twerp kept peering at me with his weird green glasses and highly unprofessional schadenfreude. I came this close to responding to him with “Only if you write me the pseudocode for Dijkstra’s Algorithm while I do it, you bombastic asshat”.

I got the code, didn’t get the gig. Idiots. Good thing too, as the firm was devoured by Accenture a few years later.

That’s when I decided that if I were to ever own the engineering hiring process at any place, I would never ever ask an interview candidate live coding questions.

See, I absolutely suck at live coding questions. I abhor them with a crimson passion. No doubt in large part because I suck at them, but more importantly because I believe that as a screening device, they are patently useless. They screen for the wrong skill. “Thinking on your feet”, while impressive, is not an imperative for a good software engineer. What are we trying to do here: write good software or defuse a bomb?

Besides, this approach racks up an impressive count of false negatives, ultimately making it a losing proposition for the hiring company. To add to that, it fails to give the candidate a good flavor of what it’s like to work on your team, something I believe is a key goal of the interview process. All it does is deliver a momentary synthetic dose of imperious adrenaline to Insolent Twerp (who shouldn’t be on your team anyway), to say nothing of an equally synthetic dose of homicidal impulse to the interviewee. Basically, this thing below, down to the blue hoodie:

It’s also why books like these make me want to puke. I’m well aware this goes against the grain of everything Google and other haughty giants believe in, but really: there are better ways.

Better ways

So, what then, discuss the weather?

Of course not. Technical screens are critical. It’s just that there is a lot more to the job than writing code. Coding, as I have often said, is the “easy” part.

My approach to this as a tech leader has been, at a 10,000-foot level, relatively simple: create as close to a real-life scenario as possible in the interview process.

There’s obviously a bunch of detail to unpack here (which I will, shortly), but it doesn’t change the fact that unless you’re writing kernel code or a search library (or of course taking CS 410), scratching out DFS code is unlikely to play any active role in your real programming life. And even if it does, it’s unlikely you will be doing it at gunpoint.

Logically, the approach is simply a reframing of the question “Will they be good at the job they are being interviewed for?” But it goes further: it gets the candidate asking “Will I enjoy working here?” Fit goes both ways.

Let’s look at a typical Day In The Life of a senior back-end engineer at, say, a fin-tech startup:

0830-0900: Coffee, watercooler, email catchup (two emails in there about pending PR reviews). Ooh, there are donuts in the kitchen!

0900-0910: Pod daily standup

0915-1130: Working with teammate on a design for the new ledgering service. Meeting with tech architects tomorrow

1130-1230: (Alleged) lunch break, interrupted halfway through her fish taco at 1152 when a prod alert comes in: webhooks are failing on the payment service listener. HELP!

1152-1311: Pair-troubleshooting with DevOps, devolving fish taco in hand, combing through Sentry logs to see what’s going on. Some array being indexed out of bounds. Code doesn’t look familiar, something weird going on.

1312-1320: Tracking down the PR that introduced that bit of bad code

1325-1400: Scanning the newly introduced code, working on hotfix (because bad PR author is in a different time zone and likely asleep)

1400-1420: Pushing hotfix to prod, writing post-mortem on issue, publishing to internal wiki.

1420-1430: A little venting on Slack, finish eating now-deconstructed fish taco.

1430-1450: Walking around the block to clear head

1500-1630: Back to ledgering service design - got the deck ready, shared with pod and tech architects

1630-1715: Meeting with pod lead and a couple of stakeholders on some new features

1715-1745: Catching up with PR reviews, sent a couple back with comments

1800-1830: More email catchup (some gripes from marketing that new landing page doesn’t render right on mobile, need to look into that tomorrow). Wind down.

Not an atypical day. You’ll notice that outside of the hotfix, which fixed someone else’s faulty code, there was hardly any net new coding during the day, let alone searching depth-first.

If this is the role you are looking to fill, what possible earthly signal did that DFS pseudocode on the whiteboard give you about your candidate, aside from some solid insight into her ability to write in a straight line on a whiteboard, and maybe her grace under pressure?

Make it a real technical screen.

Keepin’ it real

Let’s try a more realistic spin. Drawing inspiration from the Day In The Life above, here’s a stab at a “real” technical screen for that senior (L3) back-end engineer role, framed as a set of technical challenges.

Challenge 1: Can you help automate our reconciliation process?

We do a nightly reconciliation of our ledger against the ledger of our partner bank. The bank sends us a CSV file with all their transactions. Our transactions are stored in our MySQL database. Here are the <csv-format> and <table-structure>.

Currently, this reconciliation is done manually by exporting our table and comparing it to the CSV received from the bank. We need to automate this to improve our operating efficiency.

Can you build a service with an endpoint that takes the CSV, runs the reconciliation and generates a report that looks like <report-format>?

You can use any language and/or framework you like, though Java or Python is preferred. Please state any assumptions clearly and provide a README to build and run your mini reconciliation engine. Bonus points if you Dockerize it.
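To make the shape of this challenge concrete, here is a minimal sketch of the core matching logic a candidate might start from. It is not a reference solution: the real <csv-format>, <table-structure> and <report-format> are left unspecified above, so the `txn_id` and `amount` column names are hypothetical, `ledger_rows` stands in for rows already fetched from the MySQL table, and the transaction ID is assumed to be unique within each source.

```python
import csv
import io
from decimal import Decimal
from typing import Dict, Iterable, List, Mapping


def reconcile(bank_csv_text: str, ledger_rows: Iterable[Mapping[str, object]]) -> Dict[str, List[str]]:
    """Match bank rows to ledger rows by transaction ID and report the breaks.

    Hypothetical assumptions: both sides expose `txn_id` and `amount`,
    and `txn_id` is unique within each source.
    """
    # Parse the bank's CSV; these column names are placeholders for <csv-format>.
    bank = {
        row["txn_id"]: Decimal(row["amount"])
        for row in csv.DictReader(io.StringIO(bank_csv_text))
    }
    # ledger_rows stands in for the result of a SELECT against our MySQL table.
    # Converting via str() keeps amounts exact whether the driver returns str or Decimal.
    ours = {str(r["txn_id"]): Decimal(str(r["amount"])) for r in ledger_rows}

    # Decimal avoids float rounding noise when comparing money amounts.
    return {
        "missing_in_ledger": sorted(bank.keys() - ours.keys()),
        "missing_in_bank": sorted(ours.keys() - bank.keys()),
        "amount_mismatch": sorted(t for t in bank.keys() & ours.keys() if bank[t] != ours[t]),
    }


report = reconcile(
    "txn_id,amount\nT1,100.00\nT2,25.50\nT3,9.99\n",
    [
        {"txn_id": "T1", "amount": "100.00"},
        {"txn_id": "T2", "amount": "25.00"},
        {"txn_id": "T4", "amount": "42.00"},
    ],
)
print(report)
# {'missing_in_ledger': ['T3'], 'missing_in_bank': ['T4'], 'amount_mismatch': ['T2']}
```

A strong submission would go well beyond this (duplicate IDs, date windows, the HTTP endpoint, the report format), which is exactly the material the onsite discussion can dig into.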

Challenge 2: Will you approve this PR?

This one is my particular favorite, hence the name of the post 🙂

Peer code reviews are a critical component of our shipping pipeline. Here is a GitHub pull request submitted by one of your teammates. The PR has some comments that attempt to explain what it is trying to do.

Based on the diffs and comments provided, will you approve this PR? If not, why not?

Throw some obvious and some subtle logic flaws, bugs and other issues into that sample PR: style issues, null pointers, race conditions, infinite loops, that sort of thing. For more senior roles, go deeper: throw in potential performance issues (e.g. double recursion without size checks) and security issues (e.g. unrestricted document uploads without MIME-type or size checks, unsalted password hashes, etc.).
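As an illustration of one such planted flaw, the sketch below pairs an unsalted password hash (the kind of line a reviewer should flag) with one reasonable fix: a per-user random salt, a deliberately slow key-derivation function, and a constant-time comparison. The function names are hypothetical, PBKDF2 is just one swapped-in choice (bcrypt, scrypt or Argon2 are common alternatives), and the iteration count is an illustrative work factor, not a recommendation from this post.

```python
import hashlib
import hmac
import os
from typing import Tuple


# The planted flaw: an unsalted, fast hash. Identical passwords yield identical
# digests, and SHA-256 is cheap enough to brute-force at scale.
def hash_password_flawed(password: str) -> str:
    return hashlib.sha256(password.encode()).hexdigest()


# What a reviewer might ask for instead.
ITERATIONS = 600_000  # illustrative work factor; tune to your own hardware and policy


def hash_password(password: str) -> Tuple[bytes, bytes]:
    """Return (salt, digest) using a fresh random salt per password."""
    salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)
    return salt, digest


def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    """Recompute the digest with the stored salt and compare in constant time."""
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)
    return hmac.compare_digest(candidate, expected)
```

A candidate who spots the first function and proposes something like the rest, with a sentence on why salting and a slow KDF matter, is giving you far more signal than a whiteboard DFS.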

(If it’s a front-end engineer role, I may throw in a Challenge 3 as well)

Challenge 3: Did the FE get it right?

Two images of a new landing page we are building for our marketing team are attached. Image A is a PNG export of the design from our marketing design team, Image B is our front-end engineer’s realization of the design.

Did the engineer get it right?

(In other words, testing the eye for pixel-perfect detail.)

Finally (and this is key):

You have 24 hours to work on these. Please email your solutions to <insert-email-address> by <insert-date-and-time-here>.

In other words, it’s a take-home challenge, with no Insolent Twerp eyes staring at you while you work. The posted solutions provide both the gating factor and the baseline material for the onsite technical screen.

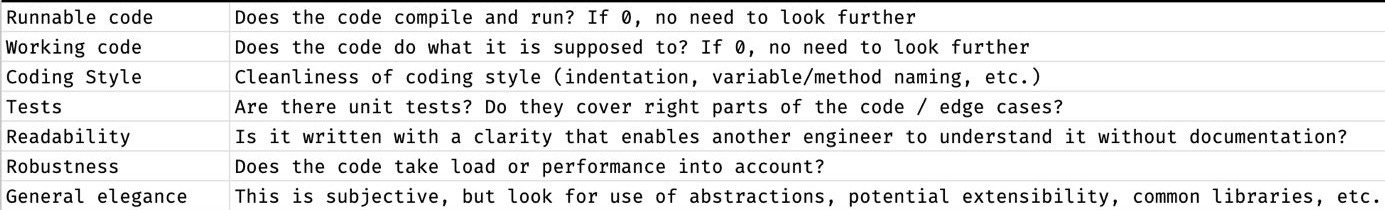

To round this off, create a quantified rubric for evaluating the solutions. Here is one I have crafted in the past for a senior back-end role, for instance:

Rate each criterion on a scale of 1 to 5. An overall score of 3 or higher could be a signal to bring the candidate in for an onsite screen.
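The arithmetic behind that threshold is simple, but writing it down keeps evaluators consistent. Here is a minimal sketch that treats “overall score” as a weighted average of the 1-5 ratings, with equal weights by default. The averaging rule, the optional weights and the criterion names in the example are all hypothetical; they are not the rubric referenced above.

```python
from typing import Dict, Optional


def rubric_score(ratings: Dict[str, int], weights: Optional[Dict[str, float]] = None) -> float:
    """Weighted average of 1-5 ratings; every criterion weighs the same unless told otherwise."""
    for criterion, rating in ratings.items():
        if not 1 <= rating <= 5:
            raise ValueError(f"{criterion}: rating {rating} is outside 1-5")
    if weights is None:
        weights = {criterion: 1.0 for criterion in ratings}
    total_weight = sum(weights[c] for c in ratings)
    return sum(ratings[c] * weights[c] for c in ratings) / total_weight


def invite_onsite(ratings: Dict[str, int], weights: Optional[Dict[str, float]] = None,
                  threshold: float = 3.0) -> bool:
    """Apply the 'overall score of 3 or higher' rule of thumb."""
    return rubric_score(ratings, weights) >= threshold


# Hypothetical criteria, for illustration only.
ratings = {"correctness": 4, "code_quality": 3, "testing": 2, "readme_and_assumptions": 4}
print(rubric_score(ratings))   # 3.25
print(invite_onsite(ratings))  # True
```

Weighting is a judgment call: a team that cares most about correctness might double that criterion, which here would lift the score to 3.4.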

Onsite tech screen

If the candidate clears the take-home challenge, the materials submitted provide a great baseline for the onsite screen. We use the time to discuss their solution, “collaboratively” improve the code, discuss alternative approaches, dig deeper into the code review, and much more, for instance:

Did she spot all the issues in the PR? How would she fix them?

How can we make the recon engine more scalable? What if the CSV is 22GB? What if other banks use different formats? How do we abstract this further?
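For a sense of where those follow-up questions can lead, here is one possible direction, sketched under loud assumptions. A 22GB file should be streamed rather than loaded, and per-bank differences can live in configuration rather than code. The `BANK_FORMATS` mapping and its column names are hypothetical, and the sorted-merge approach assumes both inputs arrive ordered by `txn_id` in the same way (for example, the ledger via `SELECT ... ORDER BY txn_id` with a binary collation, and the bank file pre-sorted externally). Bulk-loading the file into a staging table and letting MySQL do the join is an equally valid answer.

```python
import csv
from dataclasses import dataclass
from decimal import Decimal
from typing import Dict, Iterable, Iterator, TextIO, Tuple


@dataclass(frozen=True)
class Txn:
    txn_id: str
    amount: Decimal


# Hypothetical per-bank column mappings: onboarding a bank becomes a config entry,
# not a new code path. Real formats (dates, signs, encodings) would need more than this.
BANK_FORMATS: Dict[str, Dict[str, str]] = {
    "bank_a": {"txn_id": "txn_id", "amount": "amount"},
    "bank_b": {"txn_id": "Reference", "amount": "Amount (USD)"},
}


def read_bank_file(f: TextIO, bank: str) -> Iterator[Txn]:
    """Yield normalized transactions one row at a time, so the file is never fully in memory."""
    cols = BANK_FORMATS[bank]
    for row in csv.DictReader(f):
        yield Txn(txn_id=row[cols["txn_id"]], amount=Decimal(row[cols["amount"]]))


def merge_reconcile(bank_txns: Iterable[Txn], ledger_txns: Iterable[Txn]) -> Iterator[Tuple[str, str]]:
    """Walk two streams in lockstep; both MUST already be sorted by txn_id the same way."""
    bank_it, ledger_it = iter(bank_txns), iter(ledger_txns)
    bank_txn, ledger_txn = next(bank_it, None), next(ledger_it, None)
    while bank_txn is not None or ledger_txn is not None:
        if ledger_txn is None or (bank_txn is not None and bank_txn.txn_id < ledger_txn.txn_id):
            # The bank has an ID the ledger never reached.
            yield ("missing_in_ledger", bank_txn.txn_id)
            bank_txn = next(bank_it, None)
        elif bank_txn is None or ledger_txn.txn_id < bank_txn.txn_id:
            # The ledger has an ID the bank never reached.
            yield ("missing_in_bank", ledger_txn.txn_id)
            ledger_txn = next(ledger_it, None)
        else:
            # Same ID on both sides: only the amounts can disagree.
            if bank_txn.amount != ledger_txn.amount:
                yield ("amount_mismatch", bank_txn.txn_id)
            bank_txn, ledger_txn = next(bank_it, None), next(ledger_it, None)
```

In practice the bank file would be opened with `open(path, newline="")` and passed straight to `read_bank_file`, so memory use stays roughly constant regardless of file size. Whether a candidate reaches for streaming, a staging table or something else entirely is less important than how she reasons about the trade-offs.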

You would not only get a sense of the problem solving and raw code skills, but a good flavor of how she thinks, how she interacts with peers, how she takes feedback, or gives it. In other words - not just how good an engineer she is but how easy (or not) she is to work with. Is she “one of us”? Do you see her on our team?

And the best part: vice versa. She gets a feel for working on your team too. If you really want to hire her, this is critical and invaluable. You owe that to your candidate, and it can actually be a great selling point too if you have a good engineering culture (more on culture in a future post).

Aside: In our increasingly remote-friendly and distributed world, “onsite” is becoming more metaphorical than literal. Platforms like HackerRank and CoderPad help bridge this gap quite effectively. We used the former quite productively at Yieldstreet.

But ChatGPT…

How would this pass muster in this era of AI-driven code generation?

Fair question, and to some extent unavoidable. But given that the posted solution is not the end of the story, rather simply the entry point to the technical screen, any “cheaters” would be relatively easy to sniff out in the onsite tech screen, where we dig deeper into their solution live. It is not easy to comment intelligently on “your” solution if “you” didn’t actually write it.

The aftertaste

We instituted a close variant of this at Yieldstreet with great success. I was proud of the caliber of the talent we assembled there; the bar we set was exceedingly high. So much so that we actually received compliments, even from candidates who didn’t make the cut, on how much they enjoyed the interview process. That’s a good feeling, to have left a pleasant aftertaste for the candidate.

Only one thing can sour it now: not getting back to the candidate in time. I was militant about communicating our decision to the candidate within 2 business days, either way. Ghosting a candidate after an interview is in exceedingly bad and unprofessional taste. It is actually unforgivable.

Now, write me some code for a doubly linked list. Go!